March 25, 2013

Apache Stress Testing

by Alpha01

As I didn’t have anything much better to do on a Sunday afternoon, I wanted to get some benchmarks on the Apache VM that hosts my blog, www.rubyninja.org. I’ve used the ab Apache benchmarking utility in the past to simulate high load on a server, but I have not used it to benchmark Apache in detail.

My VM has a single shared Core i5-2415M 2.30GHz CPU with 1.5 GB of RAM allocated to it.

I based my benchmarks on a total of 1000 requests, with 5 concurrent requests at a time.

ab -n 1000 -c 5 http://www.rubyninja.org/index.php
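When several runs are compared, it can help to pull only the headline numbers out of each ab report. Below is a minimal sketch, assuming the report is available as text on stdin; the function name ab_summary is hypothetical and not part of ab. It also prints the "Non-2xx responses" count, since a nonzero value there means at least some of the timed responses were redirects or errors rather than normal 2xx pages.

```shell
# Hypothetical helper: summarize an ab report read from stdin.
ab_summary() {
    awk -F': *' '
        /^Complete requests/   { complete = $2 }
        /^Non-2xx responses/   { non2xx = $2 }
        /^Requests per second/ { split($2, parts, " "); rps = parts[1] }
        END { printf "rps=%s complete=%s non2xx=%d\n", rps, complete, non2xx }
    '
}

# Sample lines in the format ab prints; a real run would be piped in instead.
printf '%s\n' \
    'Complete requests:      1000' \
    'Non-2xx responses:      1000' \
    'Requests per second:    6.46 [#/sec] (mean)' | ab_summary
```

In practice the input would come from something like `ab -n 1000 -c 5 http://www.rubyninja.org/index.php | ab_summary`.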

Results

With just the mod_pagespeed Apache module enabled.

Time taken for tests: 154.687976 seconds

Complete requests: 1000

Failed requests: 0

Write errors: 0

Non-2xx responses: 1000

Total transferred: 351000 bytes

HTML transferred: 0 bytes

Requests per second: 6.46 [#/sec] (mean)

Time per request: 773.440 [ms] (mean)

Time per request: 154.688 [ms] (mean, across all concurrent requests)

Transfer rate: 2.21 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 0 0.1 0 3

Processing: 328 772 46.4 772 1040

Waiting: 327 771 46.4 772 1040

Total: 328 772 46.4 772 1040

With mod_pagespeed and APC enabled.

Time taken for tests: 41.355400 seconds

Complete requests: 1000

Failed requests: 0

Write errors: 0

Non-2xx responses: 1000

Total transferred: 351000 bytes

HTML transferred: 0 bytes

Requests per second: 24.18 [#/sec] (mean)

Time per request: 206.777 [ms] (mean)

Time per request: 41.355 [ms] (mean, across all concurrent requests)

Transfer rate: 8.27 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 6 134.1 0 3000

Processing: 88 199 28.4 202 459

Waiting: 88 199 28.4 201 459

Total: 88 205 137.2 202 3208

With the WordPress W3 Total Cache plugin configured with Page, Database, Object, and Browser cache enabled, using the APC caching method, plus mod_pagespeed.

Time taken for tests: 37.750269 seconds

Complete requests: 1000

Failed requests: 0

Write errors: 0

Non-2xx responses: 1000

Total transferred: 351000 bytes

HTML transferred: 0 bytes

Requests per second: 26.49 [#/sec] (mean)

Time per request: 188.751 [ms] (mean)

Time per request: 37.750 [ms] (mean, across all concurrent requests)

Transfer rate: 9.06 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 5 133.9 0 2996

Processing: 74 181 26.6 185 315

Waiting: 74 181 26.6 184 314

Total: 74 187 136.4 185 3178

As you can see, APC is the one caching method that makes a huge difference. Without APC, the server handled just 6.46 requests per second and the load average peaked at about 12, while with the default APC configuration enabled, it handled 24.18 requests per second with the load average peaking at about 3. Adding the W3 Total Cache WordPress plugin improved performance slightly more, from 24.18 to 26.49 requests per second (load was about the same, including I/O activity). One interesting thing I noticed is that with caching enabled (that is, APC), I/O usage spiked considerably. Most notably, MySQL was the process with the highest CPU usage during the benchmarks. Since the caching is memory-based at this point, it appears that the bottleneck in the web application is MySQL.
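To put the throughput figures above side by side, the ratios can be worked out directly. This is a small sketch that uses awk for the floating-point division; speedup is a hypothetical helper name, and the inputs are the requests-per-second values reported above.

```shell
# Hypothetical helper: ratio of two requests-per-second figures.
speedup() {
    awk -v before="$1" -v after="$2" 'BEGIN { printf "%.2f\n", after / before }'
}

speedup 6.46 24.18    # mod_pagespeed only -> mod_pagespeed + APC
speedup 24.18 26.49   # APC -> APC + W3 Total Cache
```

By this measure APC gives roughly a 3.7x increase in throughput, while W3 Total Cache adds about 10% on top of that.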

Tags: apache php wordpress

March 19, 2013

Apache: Installing mod_pagespeed on CentOS 6

by Alpha01

Error

rpm -ivh mod-pagespeed-stable_current_x86_64.rpm

warning: mod-pagespeed-stable_current_x86_64.rpm: Header V4 DSA/SHA1 Signature, key ID 7fac5991: NOKEY

error: Failed dependencies:

at is needed by mod-pagespeed-stable-1.2.24.1-2581.x86_64

Fix

yum localinstall mod-pagespeed-stable_current_x86_64.rpm
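The difference is that rpm -ivh only reports missing dependencies, while yum localinstall resolves them from the configured repositories (here, the at package). As a rough sketch, the missing package names can be pulled out of rpm's error text like this; missing_deps is a hypothetical helper, and the sample input is the error shown above.

```shell
# Hypothetical helper: list dependency names from rpm's "Failed dependencies" output.
missing_deps() {
    awk '/ is needed by / { print $1 }'
}

# Sample input copied from the error above; a real run would pipe rpm's stderr in.
printf '%s\n' \
    'error: Failed dependencies:' \
    '        at is needed by mod-pagespeed-stable-1.2.24.1-2581.x86_64' | missing_deps
```

With a real package this could be fed from `rpm -ivh mod-pagespeed-stable_current_x86_64.rpm 2>&1 | missing_deps`.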

Tags: apache centos

March 17, 2013

Apache: RedirectMatch

by Alpha01

For the longest time, I’ve been using mod_rewrite for any type of URL redirect that requires any sort of pattern matching.

A few days ago I migrated my Gallery web app from https://www.rubysecurity.org/photos to http://photos.antoniobaltazar.com, and I learned that mod_alias, the module that provides the Redirect directive, also provides RedirectMatch, which is essentially Redirect with regular expression support.

I was able to easily set up the simple redirect using RedirectMatch instead of mod_rewrite.

RedirectMatch 301 ^/photos(/)?$ http://photos.antoniobaltazar.com

RedirectMatch 301 /photos/(.*) http://photos.antoniobaltazar.com/$1
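To sanity-check what the two patterns do, the same regular expressions can be tried out with sed. This is only an approximation: sed -E uses POSIX extended regular expressions rather than Apache's regex engine, the second pattern is anchored here with ^ and $ so that sed rewrites the whole path, and rewrite_photos is a hypothetical helper name.

```shell
# Hypothetical helper: approximate the two RedirectMatch rules for a request path.
rewrite_photos() {
    printf '%s\n' "$1" | sed -E \
        -e 's#^/photos(/)?$#http://photos.antoniobaltazar.com#' \
        -e 's#^/photos/(.*)$#http://photos.antoniobaltazar.com/\1#'
}

rewrite_photos /photos               # bare path, first rule
rewrite_photos /photos/              # trailing slash, first rule
rewrite_photos /photos/album/1.jpg   # sub-path, second rule keeps the remainder
rewrite_photos /blog                 # unrelated path is left unchanged
```

The first rule handles the gallery root, while the second carries the rest of the path over to the new host through the captured group.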

Tags: apache

March 16, 2013

ZFS on Linux: Nagios check_zfs plugin

by Alpha01

To monitor my ZFS pool, of course I’m using Nagios, duh. Nagios Exchange provides a check_zfs plugin written in Perl: http://exchange.nagios.org/directory/Plugins/Operating-Systems/Solaris/check_zfs/details

Although the plugin was originally designed for Solaris and FreeBSD systems, I got it to work under my Linux system with very little modification. The code can be found on my SysAdmin-Scripts git repo on my GitHub account.

[root@backup ~]# su - nagios -c "/usr/local/nagios/libexec/check_zfs backups 3"

OK ZPOOL backups : ONLINE {Size:464G Used:11.1G Avail:453G Cap:2%} <sdb:ONLINE>

Tags: perl nagios zfs

March 15, 2013

ZFS on Linux: Storage setup

by Alpha01

For my media storage, I’m using a 500GB 5400 RPM USB drive. Since my Linux ZFS backup server is a virtual machine under VirtualBox, the VirtualBox Extension Pack add-on needs to be installed for the VM to be able to access the entire USB drive.

The VirtualBox Extension Pack for all versions can be found on the VirtualBox website. It is important that the installed Extension Pack matches the installed version of VirtualBox.

wget http://download.virtualbox.org/virtualbox/4.1.12/Oracle_VM_VirtualBox_Extension_Pack-4.1.12.vbox-extpack

VBoxManage extpack install Oracle_VM_VirtualBox_Extension_Pack-4.1.12.vbox-extpack

Additionally, it is important that the user VirtualBox runs under is a member of the vboxusers group.

groups tony

tony : tony adm cdrom sudo dip plugdev lpadmin sambashare

sudo usermod -G adm,cdrom,sudo,dip,plugdev,lpadmin,sambashare,vboxusers tony

groups tony

tony : tony adm cdrom sudo dip plugdev lpadmin sambashare vboxusers
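Listing every existing group with -G works, but leaving one out would remove the user from it. usermod also accepts -a together with -G to append a supplementary group instead. The sketch below shows that form in a comment (it needs root) and adds a small check against the output of groups; in_groups_output is a hypothetical helper name.

```shell
# Append form (not run here, needs root):
#   sudo usermod -a -G vboxusers tony

# Hypothetical helper: succeed if group $2 appears in a line printed by `groups USER`.
in_groups_output() {
    case " ${1#*: } " in
        *" $2 "*) return 0 ;;
        *)        return 1 ;;
    esac
}

# Sample line copied from the groups output above.
line='tony : tony adm cdrom sudo dip plugdev lpadmin sambashare vboxusers'
if in_groups_output "$line" vboxusers; then
    echo "vboxusers: yes"
else
    echo "vboxusers: no"
fi
```

Note that a new group membership only takes effect in sessions started after the change.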

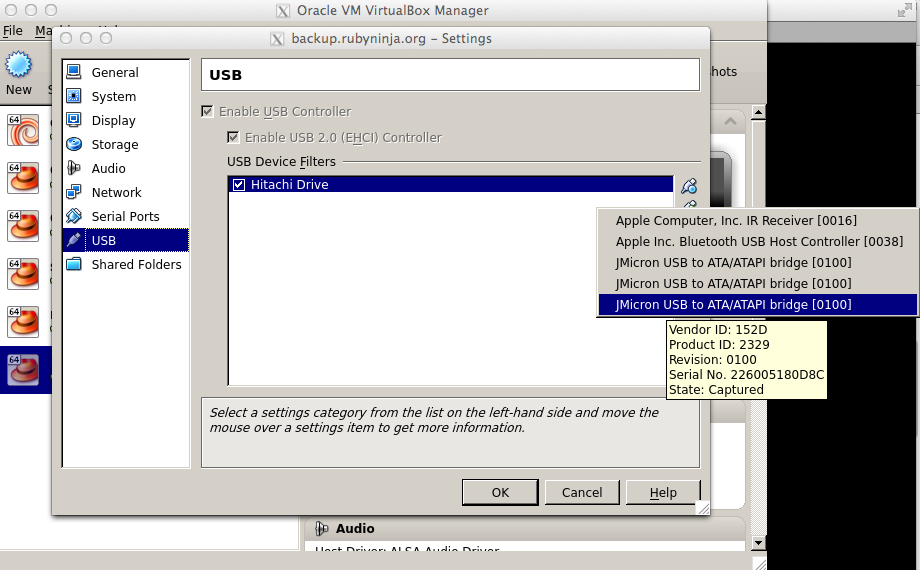

Since my computer is already using two other 500GB external USB drives, I had to properly identify the drive that I wanted to use for my ZFS data. This was a really simple process (I don’t give a flying fuck about sharing my drive’s serial).

sudo hdparm -I /dev/sdd|grep Serial

Serial Number: J2260051H80D8C

Transport: Serial, ATA8-AST, SATA 1.0a, SATA II Extensions, SATA Rev 2.5, SATA Rev 2.6; Revision: ATA8-AST T13 Project D1697 Revision 0b
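Note that grep Serial also matches the Transport line, as seen above. A slightly narrower sketch that prints only the serial number follows; drive_serial is a hypothetical helper, and the sample input mirrors the hdparm lines above (with the Transport line shortened).

```shell
# Hypothetical helper: print only the value from the "Serial Number" line.
drive_serial() {
    awk '/Serial Number:/ { print $NF }'
}

# Sample input; a real run would pipe hdparm's output in instead.
printf '%s\n' \
    '        Serial Number:      J2260051H80D8C' \
    '        Transport:          Serial, ATA8-AST, SATA 1.0a' | drive_serial
```

On the host this would be used as `sudo hdparm -I /dev/sdd | drive_serial`.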

Now that I know the serial number of the USB drive, I can configure my VirtualBox Linux ZFS server VM to automatically use the drive.

At this point I’m able to use the 500 GB hard drive as /dev/sdb under my Linux ZFS server and use it to create ZFS pools and file systems.

zpool create backups /dev/sdb

zfs create backups/dhcp

Since I haven’t used ZFS on Linux extensively before, I’m manually mounting my ZFS pool after a reboot.

[root@backup ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup-lv_root

3.5G 1.6G 1.8G 47% /

tmpfs 1.5G 0 1.5G 0% /dev/shm

/dev/sda1 485M 67M 393M 15% /boot

[root@backup ~]# zpool import

pool: backups

id: 15563678275580781179

state: ONLINE

action: The pool can be imported using its name or numeric identifier.

config:

backups ONLINE

sdb ONLINE

[root@backup ~]# zpool import backups

[root@backup ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup-lv_root

3.5G 1.6G 1.8G 47% /

tmpfs 1.5G 0 1.5G 0% /dev/shm

/dev/sda1 485M 67M 393M 15% /boot

backups 446G 128K 446G 1% /backups

backups/afs 447G 975M 446G 1% /backups/afs

backups/afs2 447G 750M 446G 1% /backups/afs2

backups/bashninja 448G 1.4G 446G 1% /backups/bashninja

backups/debian 449G 2.5G 446G 1% /backups/debian

backups/dhcp 451G 4.4G 446G 1% /backups/dhcp

backups/macbookair 446G 128K 446G 1% /backups/macbookair

backups/monitor 447G 880M 446G 1% /backups/monitor

backups/monitor2 446G 128K 446G 1% /backups/monitor2

backups/rubyninja.net

446G 128K 446G 1% /backups/rubyninja.net

backups/rubysecurity 447G 372M 446G 1% /backups/rubysecurity

backups/solaris 446G 128K 446G 1% /backups/solaris

backups/ubuntu 446G 128K 446G 1% /backups/ubuntu
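The manual import after each reboot could be scripted. Below is a minimal sketch that reads the text printed by zpool import and extracts the pool names; importable_pools is a hypothetical helper, and the sample input copies the output above.

```shell
# Hypothetical helper: print the names of pools listed by `zpool import`.
importable_pools() {
    awk '$1 == "pool:" { print $2 }'
}

# Sample input; on the server this would be `zpool import | importable_pools`.
printf '%s\n' \
    '   pool: backups' \
    '     id: 15563678275580781179' \
    '  state: ONLINE' | importable_pools
```

Each name could then be passed to zpool import. Another option is zpool import -a, which imports every pool it finds.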

Tags: ubuntu centos virtualbox zfs