March 16, 2019

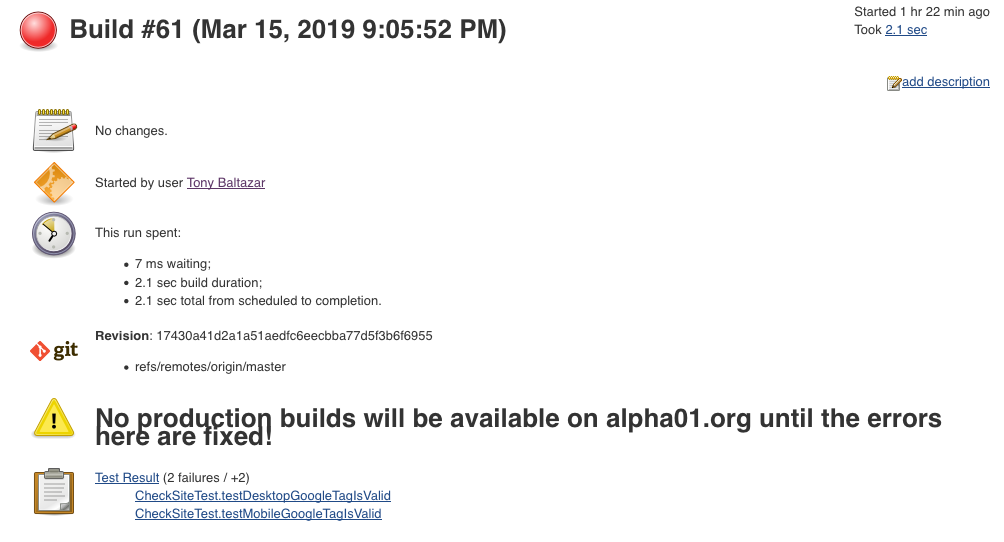

Automatically Disable Different Jenkins Projects at Build Time

by Alpha01

I use Jenkins as the CI tool for all my personal projects. My current Jenkins build plans are fairly simple (though I do plan to start using Jenkins pipelines in my build jobs in the near future), given that most of my personal projects are WordPress and Drupal sites.

My current configuration consists of two basic Freestyle projects: one for my staging build/job and the other for my production build/job. Each time my staging Freestyle project builds, it automatically creates a git tag, which is later used by my production Freestyle project to pull, build, and deploy from. This means I never want my production Freestyle project to build when the corresponding staging Freestyle project fails (for example, on failing unit tests).

The Groovy Postbuild Plugin gives you the ability to modify Jenkins itself. In my case, I want to disable the production Freestyle project whenever my staging Freestyle project fails.

In this example, my production project build/job is called rubysecurity.org.

import jenkins.*
import jenkins.model.*

// Name of the production job to toggle
String production_project = "rubysecurity.org"

try {
    if (manager.build.result.isWorseThan(hudson.model.Result.SUCCESS)) {
        // Staging build did not succeed: block production builds
        Jenkins.instance.getItem(production_project).disable()
        manager.listener.logger.println("Disabled ${production_project} build plan!")
        manager.createSummary("warning.gif").appendText("<h1>No production builds will be available on ${production_project} until the errors here are fixed!</h1>", false, false, false, "red")
    } else {
        // Staging build succeeded: make sure production can build again
        Jenkins.instance.getItem(production_project).enable()
        manager.listener.logger.println("Enabled ${production_project} build plan.")
    }
} catch (Exception ex) {
    manager.listener.logger.println("Error updating ${production_project}: " + ex.getMessage())
}

This Groovy Postbuild script ensures that the rubysecurity.org build/job is enabled when the staging build finishes without any errors; otherwise rubysecurity.org is disabled and a custom error message is displayed on the failing staging build/job.
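To make the condition concrete: Jenkins orders build results from best to worst as SUCCESS, UNSTABLE, FAILURE, NOT_BUILT, ABORTED, and isWorseThan(SUCCESS) is true for everything after SUCCESS. The Python sketch below only models that decision outside of Jenkins; the list and function names are illustrative and not part of any Jenkins API.

```python
# Illustrative model of the post-build decision; this is not Jenkins code.
# Build results ordered from best to worst, following hudson.model.Result.
RESULT_ORDER = ["SUCCESS", "UNSTABLE", "FAILURE", "NOT_BUILT", "ABORTED"]


def production_should_be_enabled(staging_result: str) -> bool:
    """Mirror `!result.isWorseThan(SUCCESS)` from the Groovy script."""
    return RESULT_ORDER.index(staging_result) <= RESULT_ORDER.index("SUCCESS")


for result in RESULT_ORDER:
    action = "enable" if production_should_be_enabled(result) else "disable"
    print(f"staging={result}: {action} production job")
```

Note that under this ordering an UNSTABLE staging build, not only a FAILURE, keeps the production job disabled.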

Tags: jenkins

November 10, 2018

Accessing KVM Guest Using Virtual Serial Console

by Alpha01

For the longest time, after creating my KVM guest virtual machines, I’ve only used virt-manager for any remote access other than direct SSH. It wasn’t until now that I finally decided to start using the serial console feature of KVM, and I have to say, I regret procrastinating on this, because the feature is really convenient.

Enabling serial console access to a guest VM is a relatively easy process.

In CentOS, it’s simply a matter of adding the following kernel parameter to GRUB_CMDLINE_LINUX in /etc/default/grub:

console=ttyS0

After adding the console kernel parameter, set to our virtual console’s device file, we have to build a new GRUB menu and reboot:

grub2-mkconfig -o /boot/grub2/grub.cfg

Afterwards, from the host system, you should be able to use virsh console to connect to the guest VM.

The only caveat with connecting to a guest using the virtual serial console is exiting the console. In my case, the way to log off the console connection was the Ctrl+5 key combination (virsh announces its escape character as ^], that is, Ctrl+]). This disconnection quirk reminded me of the good old days when I actually worked on physical servers and used IPMI’s Serial over LAN feature and its associated unique key combination to properly close the serial connection.

Resources

- https://www.certdepot.net/rhel7-access-virtual-machines-console/

- https://superuser.com/questions/637669/how-to-exit-a-virsh-console-connection

Tags: kvm

November 4, 2018

Nagios SSL Certificate Expiration Check

by Alpha01

So, a while back I demonstrated a way to set up an automated SSL certificate expiration monitoring solution.

Well, it turns out the check_http Nagios plugin has built-in support for monitoring SSL certificate expiration as well. This is accomplished using the -C / --certificate option.

Example check on a local expired Let’s Encrypt certificate:

[root@monitor plugins]# ./check_http -t 10 -H www.rubysecurity.org -I 192.168.1.61 -C 10

SSL CRITICAL - Certificate 'www.rubysecurity.org' expired on 2018-07-25 18:39 -0700/PDT.

check_http help doc:

-C, --certificate=INTEGER[,INTEGER]
   Minimum number of days a certificate has to be valid. Port defaults to 443
   (when this option is used the URL is not checked.)

CHECK CERTIFICATE: check_http -H www.verisign.com -C 30,14
   When the certificate of 'www.verisign.com' is valid for more than 30 days,
   a STATE_OK is returned. When the certificate is still valid, but for less than
   30 days, but more than 14 days, a STATE_WARNING is returned.
   A STATE_CRITICAL will be returned when certificate expires in less than 14 days
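The warning/critical thresholds described in the help text can be sketched in a few lines of Python. This is only a model of the documented behaviour, not the plugin's implementation: the function names and example dates are made up, and treating a value exactly equal to a threshold as the worse state is an assumption.

```python
from datetime import datetime, timezone


def days_until_expiry(not_after: datetime, now: datetime) -> int:
    """Whole days between `now` and the certificate's expiry date."""
    return (not_after - now).days


def classify_cert(days_left: int, warn_days: int = 30, crit_days: int = 14) -> str:
    """Map remaining validity to a Nagios-style state, as with -C 30,14."""
    if days_left <= crit_days:  # also covers certificates that already expired
        return "CRITICAL"
    if days_left <= warn_days:
        return "WARNING"
    return "OK"


# Example dates are invented for illustration.
now = datetime(2018, 11, 4, tzinfo=timezone.utc)
expiry = datetime(2018, 11, 24, tzinfo=timezone.utc)
print(classify_cert(days_until_expiry(expiry, now)))
```

With a single value, as in the -C 10 check earlier, only one threshold is set; the two-value form adds the separate critical threshold.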

Tags: nagios

November 4, 2018

Log Varnish/proxy and Local Access Separately in Apache

by Alpha01

I use Varnish on all of my web sites, with Apache as the backend web server. All traffic that reaches my sites through Varnish originates from the internet, while all access from my local home network hits Apache directly (accomplished using local authoritative BIND servers).

For the longest time, I’ve been logging all direct Apache traffic and traffic originating from Varnish to the same Apache access log file. It turns out that segmenting the access logs is a very easy task. This can be accomplished with the help of environment variables in Apache, using SetEnvIf.

For example, my Varnish server’s local IP is 192.168.1.150, and SetEnvIf can use Remote_Addr (the IP address of the client making the request) as part of its set condition. So in my case, I can check whether the request came from my Varnish server’s “192.168.1.150” address and, if so, set the is_proxied environment variable. Afterwards I can use the is_proxied environment variable to tell Apache where to log that access request.

Inside my VirtualHost directive, the log configuration looks like this:

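SetEnvIf Remote_Addr "192.168.1.150" is_proxied=1
ErrorLog /var/log/httpd/antoniobaltazar.com/error.log
# Requests that arrived through Varnish
CustomLog /var/log/httpd/antoniobaltazar.com/access.log cloudflare env=is_proxied
# Direct (local) requests only; without env=!is_proxied this log would record every request
CustomLog /var/log/httpd/antoniobaltazar.com/access-local.log combined env=!is_proxied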

Unfortunately, we can’t use the same technique to split the error logs, since ErrorLog does not support conditional logging.
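The access-log routing described above can be modelled with a small Python sketch. It is illustrative only: the function names are hypothetical, and it assumes the local log is restricted with env=!is_proxied, since a CustomLog without an env condition records every request. The pattern is the one from the post; SetEnvIf treats it as a regular expression matched against the attribute value.

```python
import re

# Pattern and file names follow the configuration in the post.
PROXY_PATTERN = re.compile(r"192.168.1.150")
PROXIED_LOG = "access.log"
LOCAL_LOG = "access-local.log"


def is_proxied(remote_addr: str) -> bool:
    """Model SetEnvIf: flag the request when the regex matches Remote_Addr."""
    return PROXY_PATTERN.search(remote_addr) is not None


def access_log_for(remote_addr: str) -> str:
    """Pick the log file, assuming the local log uses env=!is_proxied."""
    return PROXIED_LOG if is_proxied(remote_addr) else LOCAL_LOG


for addr in ("192.168.1.150", "192.168.1.42"):
    print(addr, "->", access_log_for(addr))
```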

Tags: apache, varnish

October 28, 2018

Ubuntu 18.04 LTS + Systemd + Netplan = Annoyance

by Alpha01

Unless it’s something that is supposed to help improve workflow, I really hate change, especially if the change involves replacing something that worked perfectly fine.

I upgraded (via a fresh install) from Ubuntu Server LTS 12.04 to 18.04. Among the changes is the addition of systemd, which I don’t mind to be honest, as I see it as a necessary evil. However, I was shocked to see that the traditional Debian networking configuration does not work anymore. Instead, networking is handled by a new utility called Netplan. Using Netplan for normal static networking configurations is not terrible; however, in my use case, I needed to be able to create a new virtual interface for the shared KVM bridge networking config needed for my guest VMs.

After about 30 minutes of trial and error (I wasn’t able to find any useful documentation), I opted to keep using the old legacy networking config. The only problem is that reverting to my old 12.04 networking config was not quite as easy as simply copying over the old interfaces file, so I had to do the following:

1). Remove all of the configs in /etc/netplan/ (Netplan reads files with a .yaml extension)

rm /etc/netplan/*.yaml

2). Install the ifupdown utility

sudo apt install ifupdown

Now, populate your /etc/network/interfaces config. This is how mine looks (where eno1 is my physical interface):

# ifupdown has been replaced by netplan(5) on this system. See
# /etc/netplan for current configuration.
# To re-enable ifupdown on this system, you can run:
# sudo apt install ifupdown
#
auto lo
iface lo inet loopback

auto br0
iface br0 inet static
    address 192.168.1.25
    netmask 255.255.255.0
    dns-nameservers 8.8.8.8 192.168.1.10 192.168.1.11
    gateway 192.168.1.1
    # set static route for LAN
    post-up route add -net 192.168.0.0 netmask 255.255.255.0 gw 192.168.1.1
    bridge_ports eno1
    bridge_stp off
    bridge_fd 0
    bridge_maxwait 0

After restarting the network service, my new shared interface was successfully created with the proper IP address and routing; however, DNS was not configured. This is because DNS configuration now seems to have its own dedicated tool called systemd-resolved. Getting my static DNS configured and working on the half-assed legacy networking configuration using systemd-resolved is a two-step process:

1). Update the file /etc/systemd/resolved.conf with the corresponding DNS configuration, in my case it looks like this:

[Resolve]
DNS=192.168.1.10
DNS=192.168.1.11
DNS=8.8.8.8
Domains=rubyninja.org

2). Then, finally, restart the systemd-resolved service:

systemctl restart systemd-resolved

You can verify the DNS config using:

systemd-resolve --status

It wasn’t as easy as I first imagined, but that said, this was the only inconvenience during my entire 12.04 to 18.04 upgrade.

Tags: ubuntu, systemd, networking